Waymark

This story is about developing a cross-platform video player with pitch correction, volume control, and speed changing, and about our struggle against browser bugs that had existed for five years. We have written before about our own online video editor, Pixiko.com. Discussing this editor on various social platforms helped us find a new client. How did that happen? Read on.

The Pixiko video editor is up and running and attracts new users, mostly from the USA. After launching it, we began writing about it on platforms such as Facebook, Product Hunt, Indie Hackers, and Hacker News.

From time to time, companies sent us messages offering partnerships or simply wanting to get acquainted. One day, Nathan Labenz, CEO of Waymark, wrote to us. His team was building an online video editor for quickly making TV commercials for the US market. The USA has many channels and a large audience of TV advertising viewers, so Waymark needed a fast way to produce commercials.

During a call, we discussed our side project and mentioned our marketing and development team. Nathan asked whether we could work together on some technical tasks for the Waymark editor. He then sent us the technical requirements, and we started the project.

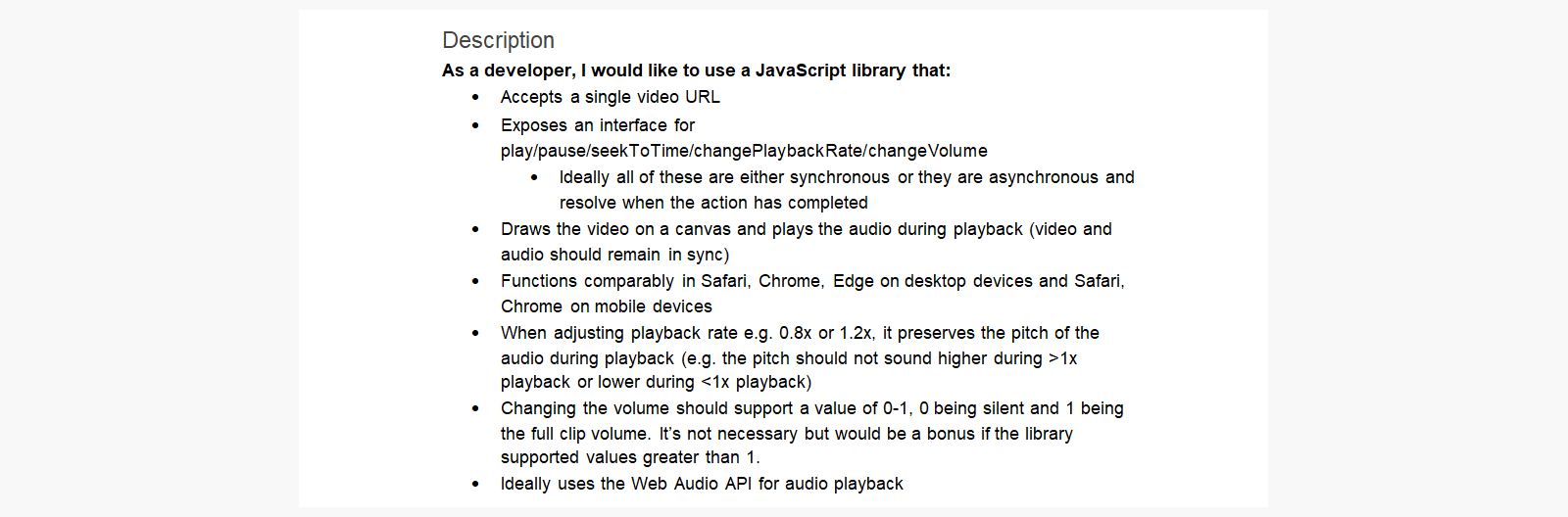

Part of the technical requirements is described below. We would like to note that all of the requirements were fully met. We also did additional work that came up while fighting bugs.

The main technical requirements

The client wanted a player that would work reliably in the desktop versions of Safari, Chrome, and Edge, and in Safari and Google Chrome on mobile devices. In addition, the player had to preserve the pitch of the video's audio while its speed was changed. Here is one part of the technical requirements:

At first sight, it seemed that we only needed to build a thin wrapper around the native API. After analyzing the problem, we saw two ways to solve it. One of them was described in the offer; the other was rather “funny”: take the audio buffer and change it by hand. That was quite possible, but we didn't want to go that way.
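A minimal sketch of what such a wrapper could look like, written in TypeScript. `preservesPitch` is the standard `HTMLMediaElement` property, and `mozPreservesPitch` / `webkitPreservesPitch` are the older Firefox and Safari prefixed names. The `PitchAwareMedia` interface and the `setSpeedKeepingPitch` helper are hypothetical stand-ins so the sketch runs outside a browser; this is not the project's code.

```typescript
// Hypothetical minimal view of a media element: only the fields this sketch touches.
interface PitchAwareMedia {
  playbackRate: number;
  preservesPitch?: boolean;       // standard property
  mozPreservesPitch?: boolean;    // older Firefox prefix
  webkitPreservesPitch?: boolean; // Safari prefix
}

// Change speed and ask the browser to keep the original pitch.
// Returns false when no pitch-preservation flag exists at all.
function setSpeedKeepingPitch(media: PitchAwareMedia, rate: number): boolean {
  if (!(rate > 0)) throw new RangeError("playback rate must be positive");
  let supported = false;
  // Only touch flags the element actually exposes (checked through the prototype chain).
  if ("preservesPitch" in media) { media.preservesPitch = true; supported = true; }
  if ("mozPreservesPitch" in media) { media.mozPreservesPitch = true; supported = true; }
  if ("webkitPreservesPitch" in media) { media.webkitPreservesPitch = true; supported = true; }
  media.playbackRate = rate;
  return supported;
}
```

A `true` result only means the flag exists. As the following paragraphs show, the flag can be present and still misbehave, which is why a wrapper alone was not enough.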

After launch, the number of problems grew. There were bugs in the browsers, and one sound option was missing on the iPhone. We also found that the voice pitch was not preserved when the video speed changed (preservesPitch did not work correctly). When the playback speed changed, the pitch of the voice changed too, and not only on the iPhone. Speeding up the video made the voice squeak, and slowing it down turned it into a bass. Sometimes the sound simply turned off. To be fair, all of these problems were trifles compared to what IE6 could do (some of you may remember that).
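To put numbers on the “squeak” and the “bass”: when audio is played faster or slower without pitch correction, every frequency is multiplied by the playback rate, and since musical pitch is logarithmic (12 semitones per doubling of frequency), the shift in semitones is 12·log2(rate). The helper names below are illustrative only.

```typescript
// Without pitch correction, every frequency is scaled by the playback rate.
function shiftedFrequency(hz: number, rate: number): number {
  return hz * rate;
}

// Pitch change in semitones caused by an uncorrected speed change.
// Positive = higher ("squeak"), negative = lower ("bass").
function semitoneShift(rate: number): number {
  if (!(rate > 0)) throw new RangeError("rate must be positive");
  return 12 * Math.log2(rate);
}
```

For example, a 200 Hz voice played at 1.5× comes out at 300 Hz, about 7 semitones higher, and at 0.5× it drops a full octave (12 semitones).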

Struggle against browsers

The most important task was fighting the browsers, some of whose bugs had existed for five years. Another task was changing the volume. We also had to replace the native preservesPitch wherever it did not work.

If we changed the sound through an AudioContext, playbackRate stopped working correctly. Using the standard API in Mozilla Firefox and Safari led to the bugs described above.

We concluded that we had to take the audio track, process it ourselves, change its volume, and preserve the pitch of the speech. However, this approach was difficult.
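The “change the volume” step, applied to decoded samples, is plain arithmetic: multiply each sample by a gain factor and clip so boosted peaks stay in the valid range of -1 to 1. The sketch below assumes mono samples already decoded into a `Float32Array`; `applyGain` and `dbToGain` are hypothetical helpers. In a browser, a Web Audio `GainNode` (`AudioContext.createGain()`) performs the same scaling live; this offline version just makes the math explicit.

```typescript
// Scale decoded PCM samples (nominal range -1..1) by a linear gain factor and
// hard-clip the result so boosted peaks cannot leave the valid range.
// Returns a new array; the input is left untouched.
function applyGain(samples: Float32Array, gain: number): Float32Array {
  if (!(gain >= 0)) throw new RangeError("gain must be non-negative");
  return samples.map((s) => Math.max(-1, Math.min(1, s * gain)));
}

// Convert a change in decibels to a linear amplitude factor
// (+6 dB is roughly 2x, +20 dB is exactly 10x).
function dbToGain(db: number): number {
  return Math.pow(10, db / 20);
}
```

Hard clipping is the simplest choice; a real product might prefer a limiter to avoid audible distortion when the boost is large.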

We assumed that others had already faced the problem of preserving pitch, and we didn't want to code our own solution, so we asked Google about it.

We chose the SoundTouchJS library, which works with audio files and can change the tempo without changing the pitch.
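The idea behind tempo change without pitch change can be shown with plain overlap-add (OLA): cut the signal into short windowed frames, step through the input faster or slower than frames are laid down in the output, and add the overlapping frames back together. Each frame is copied unchanged, so its frequencies (the pitch) stay the same while the total duration changes. This is a simplified illustration, not SoundTouchJS's code or API; libraries such as SoundTouch refine the idea by searching for the best-matching overlap position for each frame, which avoids the phasing artifacts plain OLA produces on real speech. The function name and the 1024-sample frame size are assumptions.

```typescript
// Plain overlap-add (OLA) time stretch for mono PCM samples.
// tempo > 1 shortens the audio (faster speech), tempo < 1 lengthens it.
// Pitch is kept because each windowed frame is copied unchanged; only the
// spacing between frames changes (input hop = output hop * tempo).
function olaTimeStretch(input: Float32Array, tempo: number, frameSize = 1024): Float32Array {
  if (!(tempo > 0)) throw new RangeError("tempo must be positive");
  if (!Number.isInteger(frameSize) || frameSize < 2) throw new RangeError("bad frame size");
  const outHop = Math.floor(frameSize / 2); // 50% overlap in the output
  const inHop = outHop * tempo;             // how far to advance through the input per frame
  const frames = Math.max(1, Math.floor((input.length - frameSize) / inHop) + 1);
  const outLength = (frames - 1) * outHop + frameSize;
  const out = new Float32Array(outLength);
  const weight = new Float32Array(outLength); // sum of window values per output sample

  // Periodic Hann window: with 50% overlap the windows sum to a constant.
  const hann = new Float32Array(frameSize);
  for (let n = 0; n < frameSize; n++) {
    hann[n] = 0.5 - 0.5 * Math.cos((2 * Math.PI * n) / frameSize);
  }

  for (let f = 0; f < frames; f++) {
    const inStart = Math.round(f * inHop);
    const outStart = f * outHop;
    for (let n = 0; n < frameSize; n++) {
      const i = inStart + n;
      const x = i < input.length ? input[i] : 0; // zero-pad past the end
      out[outStart + n] += x * hann[n];
      weight[outStart + n] += hann[n];
    }
  }

  // Normalize so overlapping windows do not change the overall loudness.
  for (let k = 0; k < outLength; k++) {
    if (weight[k] > 1e-6) out[k] /= weight[k];
  }
  return out;
}
```

For one second of audio at 44,100 Hz, a tempo of 2 yields roughly half a second of output and a tempo of 0.5 roughly two seconds, give or take one frame at the edges.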

However, because of those bugs, it couldn't be used with the existing player in Firefox, Safari, and mobile Safari. That's why we played the voice track in parallel with the video, with the video's own sound set to 0 (or muted, in mobile Safari). To make the player work correctly, we built different versions for different browsers.
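A parallel voice track only works if it stays aligned with the picture. The sketch below assumes the voice track was rendered ahead of time at the chosen tempo (so one second of audio covers `tempo` seconds of video) and that the video is the master clock; it silences the video with volume 0 where that is allowed and with `muted` otherwise, as the paragraph describes. `VideoLike`, `AudioLike`, `syncParallelTrack`, and the 50 ms tolerance are hypothetical; real code would pass `HTMLVideoElement` / `HTMLAudioElement` objects, which expose these properties.

```typescript
// Minimal stand-ins for the media elements (hypothetical).
interface VideoLike { currentTime: number; muted: boolean; volume: number; }
interface AudioLike { currentTime: number; }

// Keep a separately rendered voice track aligned with a silenced video.
// Assumes the voice track was pre-stretched to `tempo`.
// Returns true when the voice track had to be re-seeked.
function syncParallelTrack(
  video: VideoLike,
  voice: AudioLike,
  tempo: number,
  volumeSettable: boolean,
  toleranceSec = 0.05,
): boolean {
  // Silence the original soundtrack: volume 0 where allowed, otherwise mute.
  if (volumeSettable) video.volume = 0;
  else video.muted = true;

  const expected = video.currentTime / tempo; // where the voice track should be
  if (Math.abs(voice.currentTime - expected) > toleranceSec) {
    voice.currentTime = expected; // seek the voice track back into line
    return true;
  }
  return false;
}
```

In a page, such a function would typically run from the video's `timeupdate` handler, alongside handlers that mirror play, pause, and seeking onto the voice track.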

For optimization reasons, we had to drop Apply MaxVolume for mobile Safari and Firefox (it worked natively in the desktop version). In mobile Safari, the sound could be changed only through the audio context, but that path had a bug similar to the one in the desktop version, so we used the solution described above: playing a newly created track in parallel with the video.
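Deciding at runtime which volume path a browser needs can be done with a small probe. Apple documents that on iOS the `volume` property of a media element cannot be set from JavaScript and always reads back as 1, so writing a test value and reading it back reveals whether the element's own volume is usable. This probe is a common feature-detection trick assumed here, not taken from the project; `VolumeControl`, `canSetVolume`, and `chooseVolumePath` are hypothetical names.

```typescript
// Minimal stand-in for a media element's volume property (hypothetical).
interface VolumeControl { volume: number; }

// Write a test value and read it back; locked volume (as on iOS) ignores the write.
function canSetVolume(media: VolumeControl): boolean {
  const before = media.volume;
  const probe = before === 0.5 ? 0.25 : 0.5; // any in-range value different from the current one
  media.volume = probe;
  const settable = Math.abs(media.volume - probe) < 1e-6;
  media.volume = before; // restore the previous level (a no-op where volume is locked)
  return settable;
}

type VolumePath = "element-volume" | "audio-context-or-parallel-track";

// Pick where volume changes should be applied for this element.
function chooseVolumePath(media: VolumeControl): VolumePath {
  return canSetVolume(media) ? "element-volume" : "audio-context-or-parallel-track";
}
```

Note that on a real element the probe briefly changes the volume and fires `volumechange` events, so it is best run once, before playback starts.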

Why optimization? For long video or audio, the buffer becomes large, because at least 44,100 samples are stored for each second of audio (per channel). The human ear hears frequencies from roughly 20 to 20,000 hertz, and by the Nyquist-Shannon sampling theorem the sampling rate must be more than twice the highest frequency to recover the signal fully, which 44,100 Hz satisfies. This data is processed fast enough, but we noticed a slowdown when loading long audio tracks.
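The arithmetic behind “the buffer turns out to be large” is easy to check. Web Audio's `AudioBuffer` stores each channel as 32-bit floats, so memory grows as duration × sample rate × channels × 4 bytes. The sketch assumes stereo at 44,100 Hz; the function names are illustrative.

```typescript
// Nyquist rate: a signal band-limited to maxHz must be sampled faster than 2 * maxHz.
function nyquistRate(maxHz: number): number {
  return 2 * maxHz;
}

// Bytes needed to hold decoded audio as 32-bit floats, which is how
// Web Audio's AudioBuffer stores each channel.
function decodedAudioBytes(durationSec: number, sampleRate = 44100, channels = 2): number {
  const bytesPerSample = Float32Array.BYTES_PER_ELEMENT; // 4
  return Math.ceil(durationSec * sampleRate) * channels * bytesPerSample;
}

const tenMinutes = decodedAudioBytes(10 * 60);
console.log(nyquistRate(20000));                    // 40000, below 44100
console.log((tenMinutes / 1e6).toFixed(1) + " MB"); // 211.7 MB for 10 min of stereo
```

Ten minutes of stereo audio therefore occupies about 212 MB once decoded, before any time-stretched copy is made, which explains the slowdown on long tracks.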

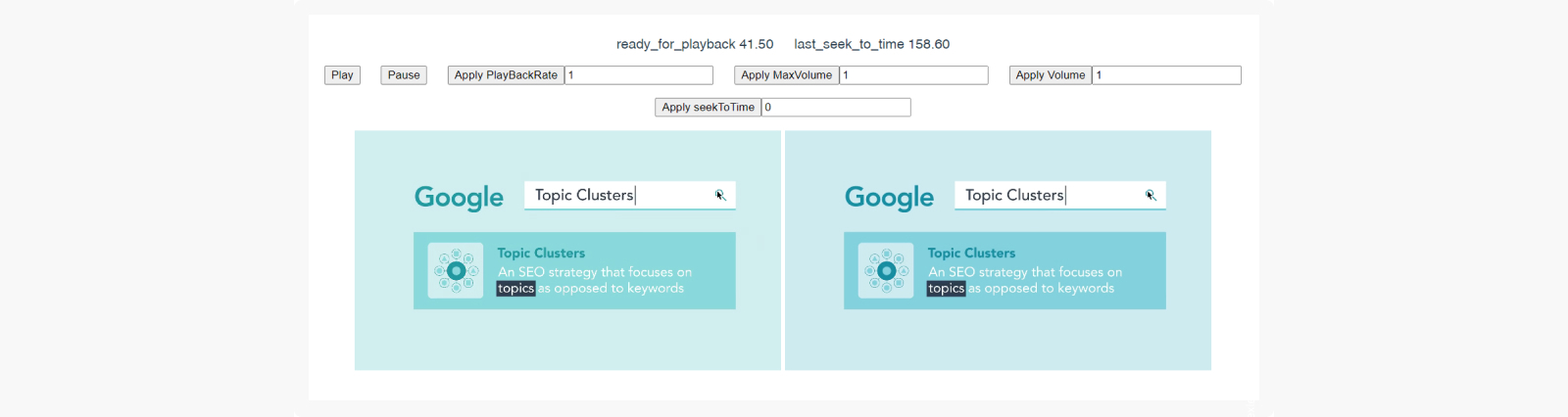

The screenshot shows the version of the player in which we tested everything.

The test passed on the second try. Some of the bugs described above showed up exactly where sound and playback speed interact. After we fixed them, everything worked well. By the way, you may notice that the render (on the right) differs from the video image. That is because the browser processes the video this way, so we see a modified version.